AWS S3 Bucket Security: The Top CSPM Practices

An S3 bucket is a fundamental resource in Amazon Web Services (AWS) for storing and managing data in the cloud. S3 (“Simple Storage Service”) provides scalable, durable, and highly available object storage.

S3 is widely used to store backups, host static websites, and serve as a data lake for analytics. It is also the primary, reliable object store at the core of many AWS solutions.

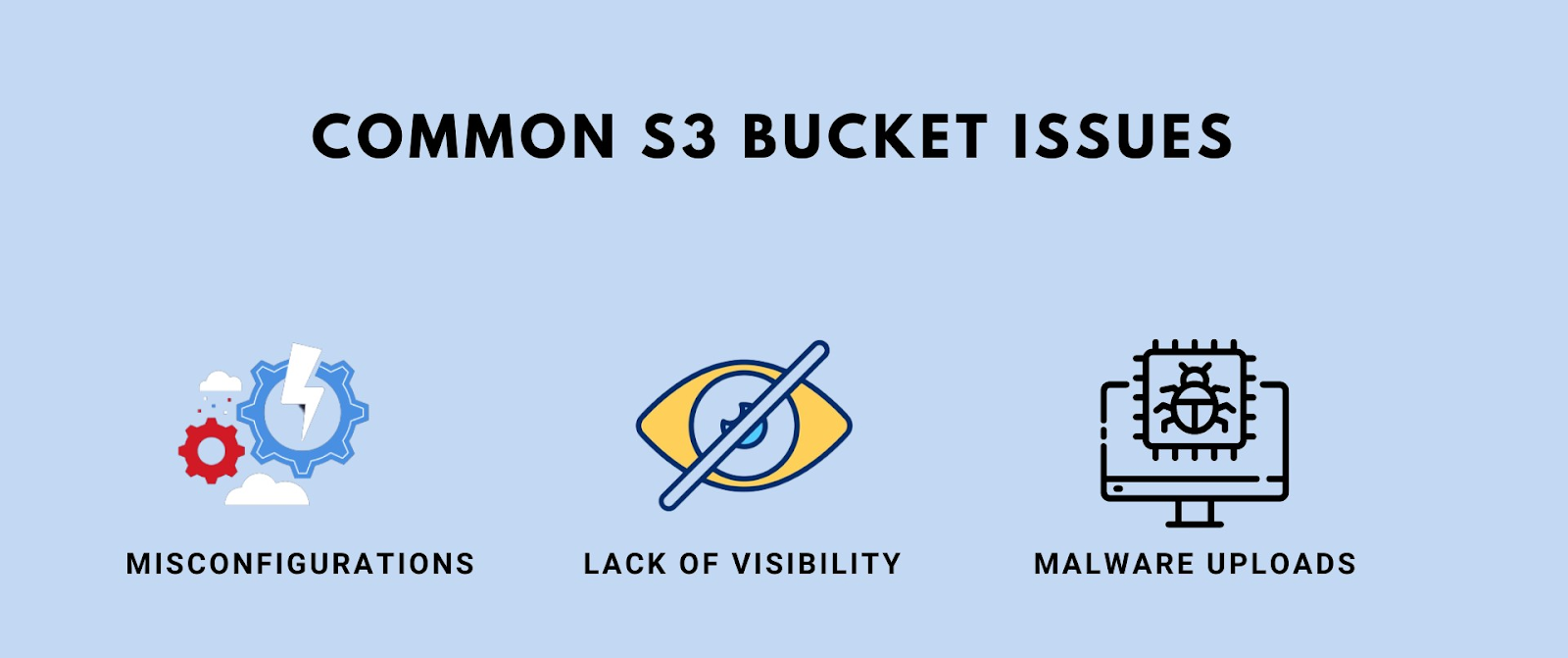

The Top S3 Bucket Risks and Issues

1. Unauthorized Access to Sensitive Data in S3 Buckets

Configuration errors in S3 settings, such as an overly permissive bucket policy or ACL, can expose sensitive data and let malicious users gain unauthorized access to critical information. To maintain security, these configuration issues must be found and corrected.

2. Data Visibility & Protection Gaps in S3 Buckets

Ensuring visibility into S3 bucket contents and assessing protection measures are crucial. Insufficient oversight can lead to unforeseen risks. Regularly auditing data and security controls is essential to safeguard stored information.

3. Malware Upload Vulnerabilities in S3 Buckets

Configuration weaknesses may permit malware uploads into S3 buckets, creating a potential threat vector. Detecting and fixing these issues is vital to prevent further cyberattacks and protect your AWS infrastructure.

Best Practices for Effective CSPM of Amazon S3 Storage

While S3 is a powerful way to store data affordably and at scale, it can also be risky. The following cloud security posture management (CSPM) best practices can significantly improve the security and compliance of your Amazon S3 buckets and reduce the risk of data breaches or incidents caused by misconfiguration.

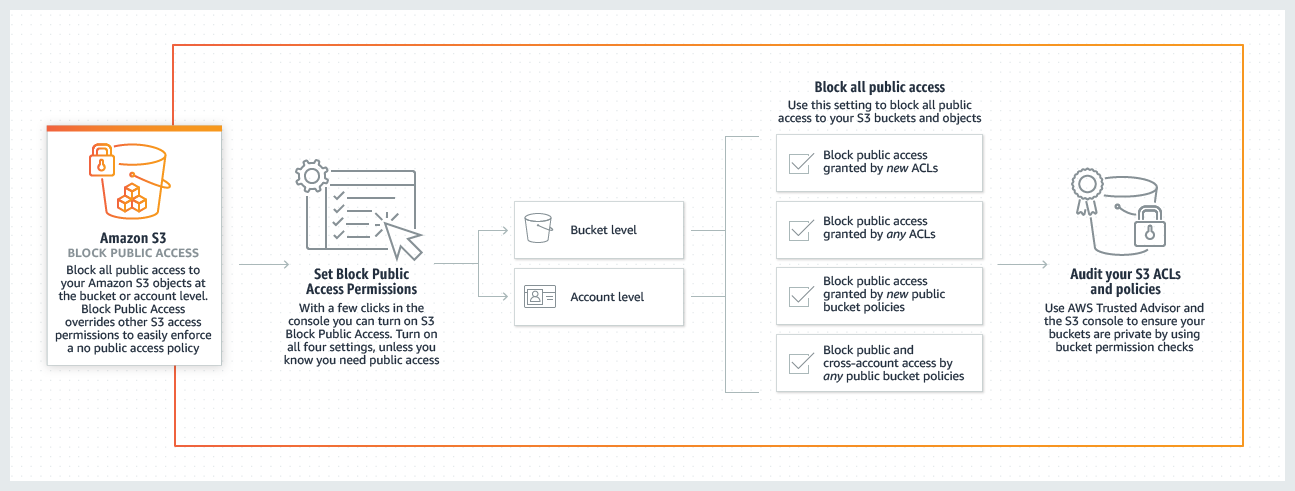

1. Configuring Bucket Permissions

Problem Statement: Inadequate or misconfigured permissions on S3 buckets can lead to a wide range of security and privacy issues. If bucket permissions are too permissive, unauthorized users or applications may gain access, potentially exposing sensitive data to unintended parties.

Explanation: Following best practices for bucket permissions is essential to maintaining the confidentiality and integrity of your data. Well-scoped permissions ensure that only authorized users or applications can interact with objects in the S3 bucket, reducing the risk of data breaches, data leaks, or compliance violations.

- Use AWS Identity and Access Management (IAM) to grant least-privilege access to S3 buckets.

- Implement access controls using resource-based policies to restrict access to specific IP ranges or VPCs.

- Avoid using overly permissive ACLs (Access Control Lists) or policies.

- Ensure that your Amazon S3 buckets are not publicly accessible by enabling S3 Block Public Access at both the account and bucket level.

- Consider Amazon S3 pre-signed URLs or Amazon CloudFront signed URLs to provide limited-time access to Amazon S3 for specific applications.
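A CSPM check for overly permissive bucket policies often starts by flagging Allow statements that name every principal ("*"). The sketch below is a deliberately simplified heuristic, not how S3 Block Public Access or IAM Access Analyzer actually decide whether a policy is public. It only looks at the Principal and Condition elements. The function names, bucket name, and account ID are hypothetical. It expects a policy document parsed from JSON, for example from the `Policy` string returned by `get_bucket_policy`.

```python
from __future__ import annotations

import json
from typing import Any


def _principals(statement: dict[str, Any]) -> list[str]:
    """Flatten a statement's Principal element into a list of strings."""
    principal = statement.get("Principal", {})
    if isinstance(principal, str):  # e.g. "Principal": "*"
        return [principal]
    values: list[str] = []
    for value in principal.values():  # e.g. {"AWS": [...]} or {"Service": "..."}
        values.extend([value] if isinstance(value, str) else value)
    return values


def find_public_statements(policy: dict[str, Any]) -> list[dict[str, Any]]:
    """Return Allow statements that grant access to everyone ("*")
    and carry no Condition block that could narrow the grant."""
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a lone statement may be a plain object
        statements = [statements]
    return [
        stmt for stmt in statements
        if stmt.get("Effect") == "Allow"
        and "*" in _principals(stmt)
        and not stmt.get("Condition")
    ]


# Hypothetical bucket policy: one public grant, one role-scoped grant.
policy = json.loads("""
{
  "Version": "2012-10-17",
  "Statement": [
    {"Sid": "PublicRead", "Effect": "Allow", "Principal": "*",
     "Action": "s3:GetObject", "Resource": "arn:aws:s3:::example-bucket/*"},
    {"Sid": "AppReader", "Effect": "Allow",
     "Principal": {"AWS": "arn:aws:iam::111122223333:role/app-reader"},
     "Action": "s3:GetObject", "Resource": "arn:aws:s3:::example-bucket/*"}
  ]
}
""")
print([s["Sid"] for s in find_public_statements(policy)])  # ['PublicRead']
```

A statement with a Condition is skipped here only to keep the sketch short. A real check would still inspect conditions, because a broad IP range can leave a bucket effectively public.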

2. Enable Logging

Problem Statement: Without comprehensive logging, it is challenging to track who is accessing your S3 buckets, what actions are being performed, and whether any security breaches or data leaks are occurring.

Explanation: Enabling S3 bucket logging serves as a critical component of your security and compliance posture. It allows you to monitor and audit access and actions, aiding in security incident investigations, compliance with data protection regulations, and accountability for any changes or activities within the bucket.

- Configure logging to store access logs in a separate S3 bucket with restricted access.

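- If you use AWS CloudTrail to record S3 API activity, enable CloudTrail log file integrity validation so you can detect whether log files were modified or deleted after delivery. S3 server access logs have no equivalent feature.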

- Monitor log data for unusual patterns or potential security incidents.

- Establish a log retention policy to determine how long log data should be retained.

- Consider centralized log management solutions such as Amazon CloudWatch Logs, Amazon OpenSearch Service (formerly Amazon Elasticsearch Service), or third-party tools.
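Server access logging is configured by passing a `BucketLoggingStatus` structure to S3's `put_bucket_logging` call. The sketch below assumes that boto3 request shape and builds the structure with a guard that enforces the "separate bucket" advice above. The helper name and bucket names are hypothetical, and the boto3 call is shown only as a comment so the example runs without AWS credentials.

```python
from __future__ import annotations


def build_logging_status(source_bucket: str, target_bucket: str,
                         prefix: str | None = None) -> dict:
    """Build the BucketLoggingStatus argument for S3 server access logging.

    Refuses to log a bucket into itself, which would generate log records
    about log deliveries. Defaults the prefix to the source bucket name so
    several buckets can share one restricted log bucket.
    """
    if source_bucket == target_bucket:
        raise ValueError("store access logs in a separate bucket")
    target_prefix = prefix if prefix is not None else f"{source_bucket}/"
    return {
        "LoggingEnabled": {
            "TargetBucket": target_bucket,
            "TargetPrefix": target_prefix,
        }
    }


status = build_logging_status("example-data", "example-access-logs")
print(status["LoggingEnabled"]["TargetPrefix"])  # example-data/

# With boto3 installed and credentials configured, this would be applied as:
# boto3.client("s3").put_bucket_logging(
#     Bucket="example-data", BucketLoggingStatus=status)
```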

3. Enable Versioning

Problem Statement: In the absence of versioning, accidental or malicious data deletions or overwrites may result in permanent data loss with no recovery option. This could be catastrophic for businesses that rely on historical data for decision-making, auditing, or regulatory compliance.

Explanation: Enabling versioning is a fundamental data protection mechanism. It creates a historical record of all object versions, allowing you to recover previous versions if data is inadvertently modified or deleted.

- Create and enforce a versioning policy for all S3 buckets within your organization.

- Implement versioning with MFA (Multi-Factor Authentication) delete protection for critical data.

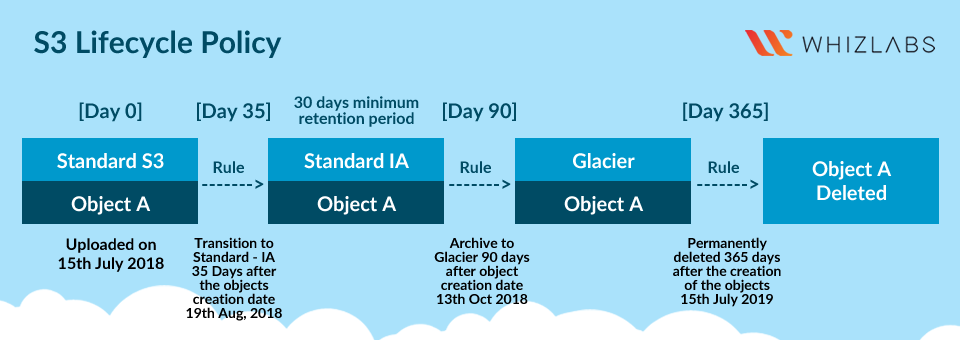

- Automate the cleanup of outdated versions using lifecycle policies.

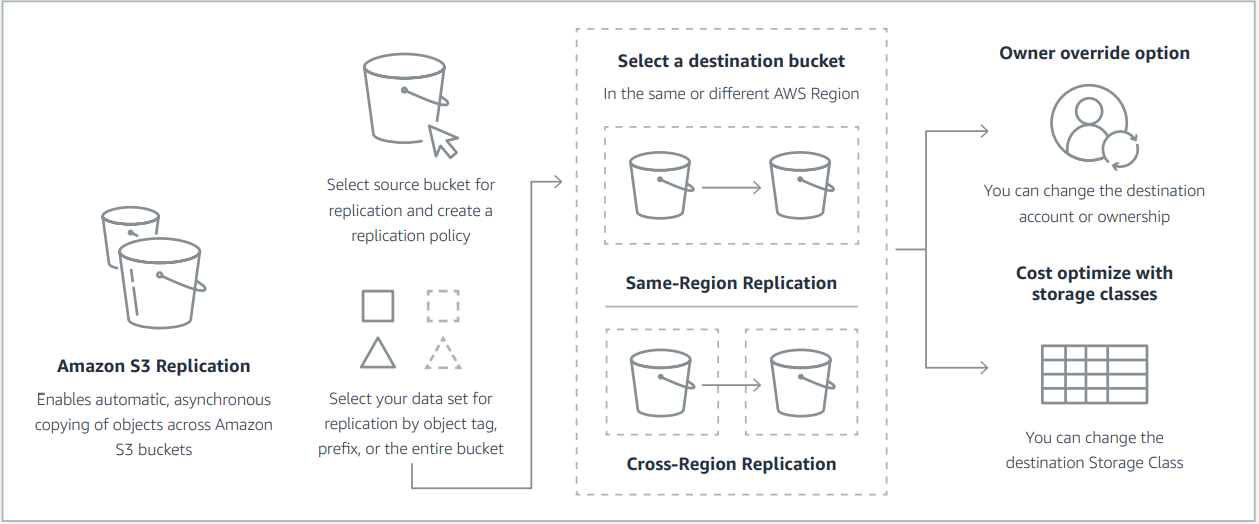

- Consider S3 Replication to a bucket in a different AWS account to protect your data and help meet compliance requirements.
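The versioning and cleanup bullets above map to two S3 request structures: `VersioningConfiguration` for `put_bucket_versioning` and a lifecycle rule using `NoncurrentVersionExpiration` for `put_bucket_lifecycle_configuration`. The sketch below builds both as plain dictionaries, assuming the boto3 request shapes. The helper names, the bucket name, and the 90-day value are illustrative assumptions, not recommendations.

```python
def versioning_config(mfa_delete: bool = False) -> dict:
    """VersioningConfiguration argument for S3 put_bucket_versioning."""
    config = {"Status": "Enabled"}
    if mfa_delete:
        # Enabling MFA Delete also requires the MFA="<serial> <code>" argument
        # on the same call, made with the bucket owner's root credentials.
        config["MFADelete"] = "Enabled"
    return config


def noncurrent_cleanup_rule(days: int, rule_id: str = "expire-noncurrent") -> dict:
    """Lifecycle rule that permanently deletes object versions `days` days
    after they become noncurrent and removes expired delete markers."""
    if days < 1:
        raise ValueError("days must be a positive integer")
    return {
        "ID": rule_id,
        "Status": "Enabled",
        "Filter": {"Prefix": ""},  # empty prefix: every object in the bucket
        "NoncurrentVersionExpiration": {"NoncurrentDays": days},
        "Expiration": {"ExpiredObjectDeleteMarker": True},
    }


# 90 days is an illustrative value only.
lifecycle = {"Rules": [noncurrent_cleanup_rule(90)]}
# With boto3 (not imported here), these would be applied as:
# s3.put_bucket_versioning(Bucket="example-bucket",
#                          VersioningConfiguration=versioning_config())
# s3.put_bucket_lifecycle_configuration(Bucket="example-bucket",
#                                       LifecycleConfiguration=lifecycle)
```

Expiring noncurrent versions trades recoverability for cost. Choose a window that is at least as long as the period in which you would realistically notice an accidental overwrite or deletion.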

4. Data Encryption

Problem Statement: Storing unencrypted data in S3 exposes it to potential data breaches, especially during data transfers or in cases of unauthorized access. In today’s data-driven world, data breaches can result in significant financial and reputational damage.

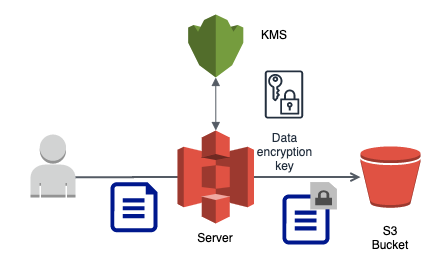

Explanation: Enforcing data encryption, both in transit and at rest, is a fundamental practice to safeguard sensitive information. It protects your data from unauthorized access, ensuring that even if data is intercepted or accessed without permission, it remains indecipherable to malicious actors, significantly reducing the risk of data compromise and data leaks.

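- Configure default encryption for the bucket so every new object is encrypted consistently. S3 applies SSE-S3 to new objects by default; choose SSE-KMS when you need control over key policies and auditing of key usage.

- Use AWS Key Management Service (KMS) to manage the keys for server-side encryption with KMS keys (SSE-KMS).

- Use client-side encryption for an additional layer of protection, encrypting data before you upload it.

- Enforce encryption in transit (HTTPS/TLS) for all access to Amazon S3.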
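Two of the bullets above translate directly into configuration:

- Default SSE-KMS encryption is set through `put_bucket_encryption`.
- Encryption in transit is commonly enforced with a bucket policy statement that denies any request where `aws:SecureTransport` is false.

The sketch below builds both as dictionaries, assuming the boto3 request shapes. The helper names, bucket name, and KMS key ARN are hypothetical.

```python
import json


def default_encryption(kms_key_arn: str) -> dict:
    """ServerSideEncryptionConfiguration for put_bucket_encryption (SSE-KMS)."""
    return {
        "Rules": [{
            "ApplyServerSideEncryptionByDefault": {
                "SSEAlgorithm": "aws:kms",
                "KMSMasterKeyID": kms_key_arn,
            },
            # S3 Bucket Keys reduce the number of requests S3 makes to KMS.
            "BucketKeyEnabled": True,
        }]
    }


def deny_insecure_transport(bucket: str) -> dict:
    """Bucket policy statement that rejects any request not sent over TLS."""
    return {
        "Sid": "DenyInsecureTransport",
        "Effect": "Deny",
        "Principal": "*",
        "Action": "s3:*",
        "Resource": [f"arn:aws:s3:::{bucket}", f"arn:aws:s3:::{bucket}/*"],
        "Condition": {"Bool": {"aws:SecureTransport": "false"}},
    }


policy = {"Version": "2012-10-17",
          "Statement": [deny_insecure_transport("example-bucket")]}
# json.dumps(policy) is the string put_bucket_policy expects as Policy=.
print(json.dumps(policy, indent=2))
```

The deny statement covers both the bucket ARN and the object ARN pattern, so bucket-level calls such as listing are blocked over plain HTTP as well as object reads and writes.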

5. Monitoring, Auditing, and Alerts

Problem Statement: Without continuous monitoring and auditing, it’s challenging to detect and respond to suspicious activities and security incidents on time. This can lead to prolonged security breaches and increased damage.

Explanation: Implementing robust monitoring, auditing, and alerting mechanisms is a proactive approach to cybersecurity. It enables you to identify and respond to security threats in real time, reducing the potential impact of breaches and helping to maintain data confidentiality, integrity, and availability.

- Implement automated alerting systems to promptly detect and respond to any unauthorized access attempts or configuration alterations.

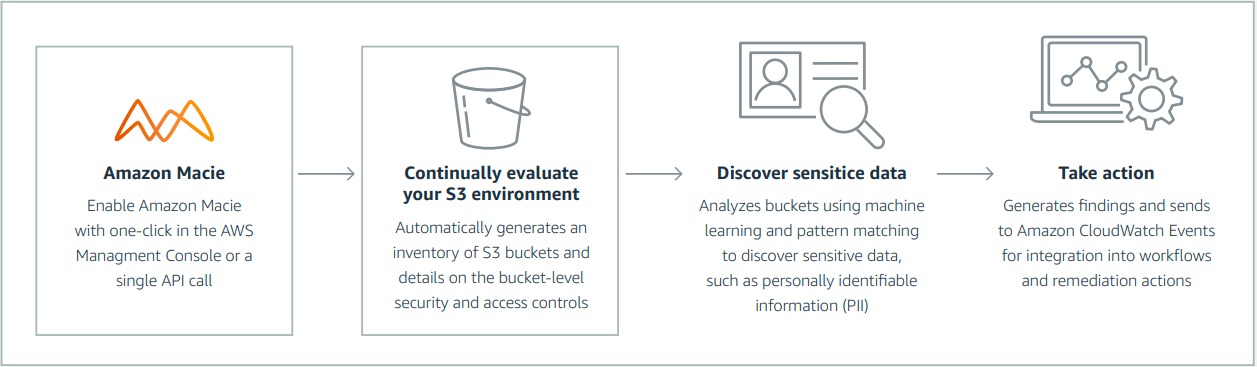

- Use Amazon Macie to discover sensitive data, such as personally identifiable information, in your buckets and to flag buckets that are publicly accessible, unencrypted, or shared outside your account.

- Use Amazon S3 Inventory to audit and report on the replication and encryption status of your objects.

- Use Tag Editor to label resources as security-sensitive or audit-sensitive.
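For automated alerting on configuration changes, one common approach assumes CloudTrail is recording S3 management events. Under that assumption, you can match the relevant API calls either with an Amazon EventBridge rule or by scanning CloudTrail records yourself. The sketch below shows both forms for the same set of calls. The event list is an illustrative, non-exhaustive choice, and `needs_alert` is a hypothetical helper, not an AWS API.

```python
# Illustrative, non-exhaustive set of S3 API calls that change security posture.
SENSITIVE_S3_EVENTS = frozenset({
    "PutBucketPolicy", "DeleteBucketPolicy", "PutBucketAcl",
    "PutBucketPublicAccessBlock", "DeleteBucketPublicAccessBlock",
    "PutBucketEncryption", "DeleteBucketEncryption",
    "PutBucketLogging", "PutBucketVersioning",
})

# EventBridge event pattern matching the same calls as delivered by CloudTrail.
EVENT_PATTERN = {
    "source": ["aws.s3"],
    "detail-type": ["AWS API Call via CloudTrail"],
    "detail": {
        "eventSource": ["s3.amazonaws.com"],
        "eventName": sorted(SENSITIVE_S3_EVENTS),
    },
}


def needs_alert(record: dict) -> bool:
    """True if a CloudTrail record is a sensitive S3 configuration change."""
    return (record.get("eventSource") == "s3.amazonaws.com"
            and record.get("eventName") in SENSITIVE_S3_EVENTS)


# Hypothetical, trimmed-down CloudTrail record.
sample = {"eventSource": "s3.amazonaws.com", "eventName": "DeleteBucketPolicy",
          "requestParameters": {"bucketName": "example-bucket"}}
print(needs_alert(sample))  # True
```

In practice, the EventBridge pattern would be attached to a rule whose target (for example, an SNS topic) notifies the security team. The local function is useful for back-testing the same logic against archived CloudTrail logs.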

6. Access Control Lists (ACLs) & Bucket Policies

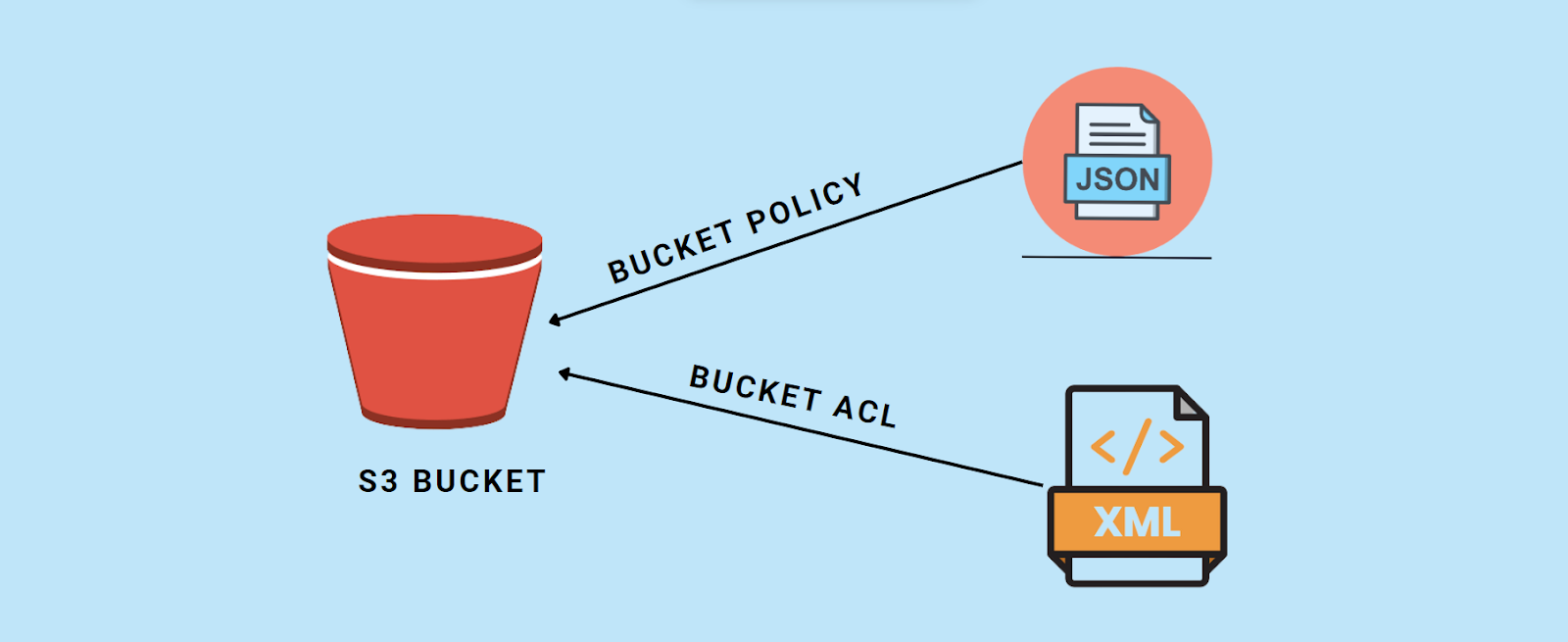

Problem Statement: Misconfigured or overly permissive ACLs and bucket policies can lead to unauthorized data access and data exposure, which can result in data breaches and compliance violations.

Explanation: Properly defining ACLs and bucket policies is essential to control access to your S3 resources granularly. These mechanisms ensure that only authorized users, applications, or systems have the necessary permissions to interact with your S3 objects, reducing the risk of data exposure and unauthorized access.

- Maintain a central repository of ACLs and bucket policies for documentation and version control.

- Avoid setting public-read or public-read-write ACLs unless it’s explicitly required for your use case. Public access should be carefully controlled.

- Regularly review and test policies to ensure they function as intended.

- Implement policy conditions like MFA or IP restrictions for sensitive buckets.

- Disable ACLs, except in unusual circumstances where you must control access for each object individually.
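Two checks follow from the ACL guidance above:

- Detect ACL grants to the global `AllUsers` group (anyone) or `AuthenticatedUsers` group (any AWS account).
- Disable ACLs altogether by setting Object Ownership to `BucketOwnerEnforced`.

The sketch below assumes the grant structure returned by `get_bucket_acl` and the request shape of `put_bucket_ownership_controls`. The helper name and the sample ACL are hypothetical.

```python
from __future__ import annotations

PUBLIC_GROUPS = {
    "http://acs.amazonaws.com/groups/global/AllUsers",            # anyone
    "http://acs.amazonaws.com/groups/global/AuthenticatedUsers",  # any AWS account
}

# OwnershipControls argument for put_bucket_ownership_controls;
# "BucketOwnerEnforced" disables ACLs on the bucket entirely.
OWNERSHIP_CONTROLS = {"Rules": [{"ObjectOwnership": "BucketOwnerEnforced"}]}


def public_acl_grants(acl: dict) -> list[tuple[str, str]]:
    """Return (group URI, permission) pairs that expose an ACL publicly.

    `acl` is assumed to have the shape of a get_bucket_acl response.
    """
    findings = []
    for grant in acl.get("Grants", []):
        grantee = grant.get("Grantee", {})
        if grantee.get("Type") == "Group" and grantee.get("URI") in PUBLIC_GROUPS:
            findings.append((grantee["URI"], grant.get("Permission", "")))
    return findings


# Hypothetical ACL: the owner has FULL_CONTROL and everyone can READ.
acl = {
    "Grants": [
        {"Grantee": {"Type": "CanonicalUser", "ID": "example-owner-id"},
         "Permission": "FULL_CONTROL"},
        {"Grantee": {"Type": "Group",
                     "URI": "http://acs.amazonaws.com/groups/global/AllUsers"},
         "Permission": "READ"},
    ]
}
print(public_acl_grants(acl))
```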

7. AWS Organizations

Problem Statement: Managing AWS resources in a disorganized or uncoordinated manner can lead to security gaps, compliance issues, and inefficient resource allocation. It can be challenging to maintain a clear picture of resource usage and access across a complex AWS environment.

Explanation: AWS Organizations provides a framework for centralizing resource management, simplifying billing, and creating account hierarchies. This approach helps ensure consistent security policies, efficient resource allocation, and improved visibility across your organization’s AWS infrastructure, reducing the risk of security gaps and compliance violations.

- Centralize S3 bucket security settings and monitoring across multiple AWS accounts using AWS Organizations.

- Use AWS Organizations to simplify billing and cost management for S3 storage.

- Implement AWS Service Control Policies (SCPs) to enforce security policies consistently.

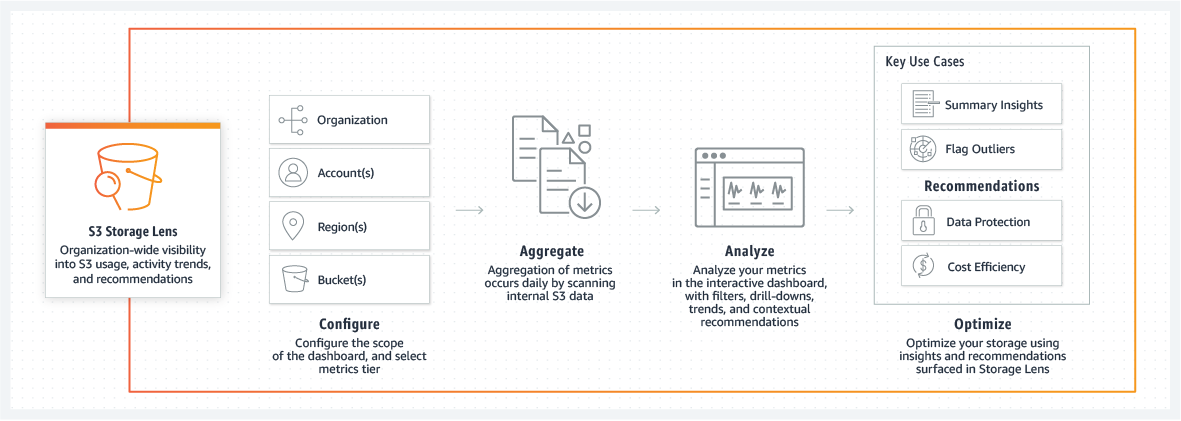

- Utilize S3 Storage Lens metrics to quickly gain insights into your organization’s storage usage, including identifying the fastest-growing buckets and prefixes.
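As an example of enforcing S3 security consistently with SCPs, the sketch below builds a policy that stops member accounts from changing account-level S3 Block Public Access. The IAM action `s3:PutAccountPublicAccessBlock` governs that setting. One designated admin role is exempted through an `aws:PrincipalArn` condition, and the role name is a hypothetical placeholder. The sketch also checks the document against the 5,120-character SCP size quota. Treat it as a starting point to test in a non-production organizational unit, not a drop-in policy.

```python
import json

SCP_MAX_CHARS = 5120  # AWS Organizations size quota for one SCP document


def protect_block_public_access_scp(admin_role_arn_pattern: str) -> dict:
    """SCP denying changes to account-level S3 Block Public Access
    for every principal except roles matching the given ARN pattern."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Sid": "DenyAccountBlockPublicAccessChanges",
            "Effect": "Deny",
            "Action": ["s3:PutAccountPublicAccessBlock"],
            "Resource": "*",
            "Condition": {
                "ArnNotLike": {"aws:PrincipalArn": admin_role_arn_pattern}
            },
        }],
    }


# "SecurityAdmin" is a hypothetical role name; "*" matches any member account ID.
scp = protect_block_public_access_scp("arn:aws:iam::*:role/SecurityAdmin")
document = json.dumps(scp, separators=(",", ":"))  # compact JSON
if len(document) > SCP_MAX_CHARS:
    raise ValueError("SCP exceeds the size quota; split it into several policies")
# The document string would then be passed as Content= to the Organizations
# create_policy call with Type="SERVICE_CONTROL_POLICY".
```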

8. Backup and Disaster Recovery

Problem Statement: Without a solid backup and disaster recovery strategy, data may be permanently lost due to data corruption, accidental deletion, hardware failures, or natural disasters, resulting in financial losses and disruptions to business operations.

Explanation: Implementing a robust backup and disaster recovery plan is vital for data resilience and business continuity. It ensures that data can be recovered and restored in case of unforeseen incidents, reducing the risk of data loss, downtime, and the associated financial and operational impacts.

- Create and maintain off-site backups of critical data to ensure data recovery in case of data loss.

- Test disaster recovery procedures to verify data can be restored successfully.

- Implement versioned backups to have historical data snapshots available for recovery.

- Set up cross-region replication for critical data to create a geographically redundant copy of your S3 objects in another AWS region.

- Define backup frequency based on your Recovery Point Objective (RPO). Critical data might require more frequent backups than less critical data.
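The cross-region replication and RPO bullets above can be sketched as follows, assuming the boto3 request shape for `put_bucket_replication`. The role ARN, bucket names, and helper names are hypothetical. Delete marker replication is disabled on purpose, so a deletion in the source bucket (accidental or malicious) does not hide the object in the replica.

```python
def replication_config(role_arn: str, dest_bucket: str,
                       storage_class: str = "STANDARD_IA") -> dict:
    """ReplicationConfiguration argument for S3 put_bucket_replication.

    Source and destination buckets must both have versioning enabled.
    """
    return {
        "Role": role_arn,  # IAM role that S3 assumes to copy objects
        "Rules": [{
            "ID": "replicate-all",
            "Priority": 1,
            "Status": "Enabled",
            "Filter": {"Prefix": ""},  # empty prefix: every new object
            # Don't propagate delete markers to the replica.
            "DeleteMarkerReplication": {"Status": "Disabled"},
            "Destination": {
                "Bucket": f"arn:aws:s3:::{dest_bucket}",
                "StorageClass": storage_class,
            },
        }],
    }


def meets_rpo(backup_interval_hours: float, rpo_hours: float) -> bool:
    """In the worst case, a failure just before the next backup loses a full
    interval of data, so the interval must not exceed the RPO."""
    return backup_interval_hours <= rpo_hours


config = replication_config(
    "arn:aws:iam::111122223333:role/example-replication-role",
    "example-dr-bucket-us-west-2",
)
print(meets_rpo(24, 4))  # False: daily backups cannot meet a 4-hour RPO
```

A replication rule applies to objects written after it is configured. Existing objects need a separate copy, for example with S3 Batch Replication.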

9. Data Classification and Object Lifecycle Policies

Problem Statement: Unmanaged data often leads to the accumulation of unnecessary and potentially sensitive information, increasing storage costs and the risk of compliance violations. Inefficient data management can also hinder data retrieval and analysis efforts.

Explanation: Implementing data classification and object lifecycle policies helps efficiently manage your data throughout its lifecycle. It enables you to identify, classify, and automate the management of data, reducing storage costs and ensuring compliance with data retention requirements. Efficient data management also supports better data analysis and retrieval.

- Assign metadata tags to objects to denote their sensitivity levels and identify their respective data owners.

- Implement data loss prevention (DLP) policies to prevent unauthorized sharing of sensitive data.

- Archive objects to more cost-effective storage classes such as S3 Glacier Instant Retrieval, S3 Glacier Flexible Retrieval, and S3 Glacier Deep Archive for data that doesn’t necessitate frequent access.

- Define clear object lifecycle policies in accordance with data retention requirements to ensure proper data management.

- Delete objects deliberately and verify the result. In a versioned bucket, a simple delete request only adds a delete marker, so earlier versions remain until each version is deleted or expired by a lifecycle rule.
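Classification tags and lifecycle policies work together: a lifecycle rule can filter on an object tag and move matching objects through the archive storage classes mentioned above. In the S3 API, the identifiers are `GLACIER_IR` (Glacier Instant Retrieval), `GLACIER` (Glacier Flexible Retrieval), and `DEEP_ARCHIVE`. The sketch below assumes the boto3 lifecycle rule shape. The tag key, tag value, helper name, and day counts are hypothetical; set the real values from your own retention requirements. The ordering checks are sanity checks the sketch applies before you submit a rule.

```python
from __future__ import annotations


def tiering_rule(tag_key: str, tag_value: str,
                 transitions: list[tuple[int, str]],
                 expire_after_days: int | None = None) -> dict:
    """Lifecycle rule for objects carrying one classification tag.

    transitions: (days after creation, storage class) pairs, such as
    (90, "GLACIER_IR"), (180, "GLACIER"), (365, "DEEP_ARCHIVE").
    """
    days = [d for d, _ in transitions]
    if days != sorted(days) or len(set(days)) != len(days):
        raise ValueError("transition days must be strictly increasing")
    if expire_after_days is not None and days and expire_after_days <= days[-1]:
        raise ValueError("expiration must come after the last transition")
    rule = {
        "ID": f"{tag_key}-{tag_value}-lifecycle",
        "Status": "Enabled",
        "Filter": {"Tag": {"Key": tag_key, "Value": tag_value}},
        "Transitions": [{"Days": d, "StorageClass": sc} for d, sc in transitions],
    }
    if expire_after_days is not None:
        rule["Expiration"] = {"Days": expire_after_days}
    return rule


# Hypothetical schedule: archive tier over a year, delete after roughly 7 years.
rule = tiering_rule("classification", "archive",
                    [(90, "GLACIER_IR"), (180, "GLACIER"), (365, "DEEP_ARCHIVE")],
                    expire_after_days=2555)
# boto3: s3.put_bucket_lifecycle_configuration(
#     Bucket="example-bucket", LifecycleConfiguration={"Rules": [rule]})
```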

10. Documentation and Training

Problem Statement: The lack of proper documentation and training can result in misconfigurations, security lapses, and inefficient use of S3 resources. It can lead to confusion among team members and a lack of consistent best practice adherence.

Explanation: Providing comprehensive documentation and training is essential to ensure that your team understands and follows best practices for AWS S3 bucket management. It promotes consistency, reduces the likelihood of errors, and helps teams work together effectively, ultimately leading to more secure and efficient S3 bucket management.

- Create and maintain comprehensive documentation of S3 bucket security configurations.

- Maintain an inventory of all S3 buckets, including their purpose, data classification, and responsible owners.

- Provide regular security training for your team to stay updated on AWS security best practices.

- Encourage a culture of security awareness and responsibility within your organization.

- Keep documentation up-to-date as your AWS environment evolves, and review it regularly to incorporate lessons learned and best practices.